Artificial intelligence is steadily infiltrating domains once considered uniquely human. It writes, reasons, and innovates—often with a precision that should unsettle us. But how do we define this “superiority”? Does it merely mean speed, efficiency, and flawless execution?

Or are we sacrificing what makes us human: intuition, creativity, the spark of irrational genius? If machines handle all the thinking, who remains to imagine? Over-reliance on AI in decision-making could erode human oversight and understanding, producing decisions that lack empathy or ignore the human context.

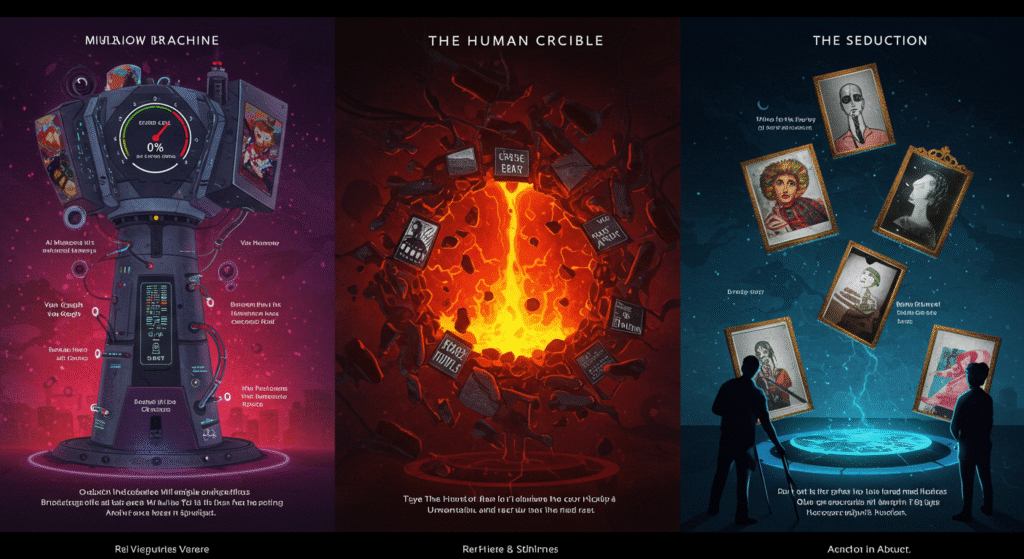

The Limits of AI’s “Objectivity”

For centuries, philosophers have debated the concepts of intelligence, creativity, and wisdom. Plato idealized a realm of pure forms—truth, beauty, justice—existing beyond human perception. By that standard, AI appears to be the ultimate scholar: it detects patterns flawlessly, processes data endlessly, and operates without fatigue or bias (in theory). But does it understand? Or is it just an advanced mimic, dazzling us with statistical sleight of hand?

The problem lies in how we measure success, and in AI’s limited grasp of the human experience.

We judge AI by quantifiable metrics, including accuracy, speed, and consistency. Yet true brilliance—the kind that drives art, science, and breakthrough ideas—transcends these benchmarks.

- AI can draft a perfect lesson plan, but does it inspire students?

- It can generate a data-driven business strategy, but can it navigate office politics or cultural nuances?

- It can compose a symphony, but has it ever felt the ache of heartbreak or the rush of inspiration?

We evaluate AI by its output because the output is measurable. But human achievement is about more than results; it’s about context, intent, and the messy, unpredictable process of creation, all of which AI struggles to replicate. AI excels at remixing existing patterns, but when has it ever shattered them?

The Illusion of Creativity

Great artists don’t just assemble words or brushstrokes—they channel something intangible. Hemingway wrote with raw intensity, Didion with surgical precision, Kafka with haunting unease. Their work didn’t just follow rules; it bent them.

AI can craft elegant metaphors or mimic famous styles, but where’s the hunger? Has it ever lost sleep over an unsolvable idea?

Nietzsche would’ve scoffed at AI’s “genius.” As Hamlet reminds Horatio, “There are more things in heaven and earth, Horatio, than are dreamt of in your philosophy.” Machines can calculate and simulate, but can they disrupt? Where’s the line that upends an era, the idea that forces humanity to rethink everything?

This extends far beyond art.

- A CEO who only executes optimized strategies isn’t a visionary—just a competent manager. Steve Jobs didn’t just build gadgets; he understood human desire.

- A teacher who only recites facts isn’t educating—they’re a voice-activated textbook.

Knowledge without passion is just data.

So the real question isn’t whether AI will surpass us. It’s whether it can redefine reality—not just reflect it. Will it ever produce something that shakes us to our core? Or will it remain a polished echo of the past?

The Seduction of Convenience

Every AI breakthrough shifts the goalposts. First, we said, “If a machine can write, it’s intelligent.” Then came GPT-4, GPT-5, Gemini—each more convincing than the last. Now, we’re left asking: But does it truly understand what it’s saying?

Welcome to the infinite loop of AI progress.

We build increasingly sophisticated models, yet the core dilemma remains: When does statistical prowess become consciousness?

John Searle’s Chinese Room thought experiment feels eerily prescient. Modern AI doesn’t “understand” language; it manipulates it probabilistically. A large language model (LLM) predicts the next token from statistical patterns learned across trillions of tokens of training text. It’s astonishingly effective, but is it thinking?

Here’s the paradox:

The more realistic AI becomes, the harder it is to distinguish simulation from sentience.

- GPT-4 drafts legal contracts.

- Chatbots like Pi or Claude simulate empathy.

- Midjourney generates art that stirs emotions.

Yet all of it is pattern recognition, not lived experience. Every test we give AI is a test we designed—a mirror of our own biases. Maybe the real Turing test isn’t whether AI can fool us, but whether we’re fooling ourselves.

The Quiet Threat: Cognitive Complacency

We treat AI as a “better human”—faster, smarter, infallible. But that’s the trap. AI isn’t human. It’s something else entirely. And that’s where the real risk lies.

It’s not just about delegating tasks to machines; it’s about relinquishing the very act of thinking itself. The potential loss of human skills is a cause for concern.

- Calculators replaced mental math.

- Google replaced memory.

- GPS replaced navigation.

Now, we’re on the verge of surrendering our creativity, strategy, and even moral reasoning to AI. But these are not just skills; they are the essence of what makes us human. What happens when we forget how to think—or worse, how to feel?

Emotions are not some mystical force; they are the result of cognitive labor. Our brains interpret, weigh meaning, and react. But if AI starts dictating our emotions—deciding what angers us, moves us, or inspires us—then feeling becomes just another engineered product. Optimized for clicks. Tailored for ad revenue. The real danger isn’t that AI will outthink us. It’s that we’ll stop thinking and feeling altogether.
